MCP: How AI Models Break Free from Their Sandbox

Learn how the Model Context Protocol (MCP) enables AI models to access external tools and services, breaking free from their isolated environments to perform real-world tasks.

Large language models (LLMs) like GPT-4 and Claude have transformed how we interact with AI. However, these models have a fundamental limitation: on their own they can only draw on their training data and the text of the current conversation, with no native ability to interact with the outside world. The Model Context Protocol (MCP) addresses this by defining a standardized bridge between AI models and external tools. Let’s explore how the protocol works.

What is MCP and Why Should You Care?

The Model Context Protocol (MCP) lets AI assistants interact with external tools and services instead of staying confined to their isolated environments. This seemingly simple capability has large consequences: an assistant can check your GitHub PRs, access real-time data, and perform actions that were previously impossible from inside the model’s limited context.

Think of MCP as an “API for AI models” that standardizes how they communicate with the external world.

To learn more about the MCP standard, visit the official MCP documentation website.

The MCP Connection Flow Explained

Establishing a connection between a client application and an MCP server follows a specific sequence:

Step 1: Establish the Event Stream Connection

First, the client opens a Server-Sent Events (SSE) connection to receive real-time updates from the server:

curl -N -H "Accept: text/event-stream" http://localhost:8080/events

Step 2: Receive the Endpoint Information

The server responds with the specific endpoint URL the client should use:

event: endpoint
data: http://localhost:8080/message?clientID=xxxxx
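To make Steps 1 and 2 concrete, here is a minimal Python sketch of how a client might parse the event stream and pick out the endpoint URL. The function name `parse_sse` and the hard-coded sample stream are illustrative assumptions; a real client would read these lines from the open HTTP response rather than from a list.

```python
from typing import Iterable, Iterator, List, Tuple


def parse_sse(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (event_name, data) pairs from Server-Sent Events text lines."""
    event = "message"  # SSE default when no "event:" field is sent
    data: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line marks the end of one event.
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        elif line.startswith("event:"):
            event = line[len("event:"):].strip()
        elif line.startswith("data:"):
            value = line[len("data:"):]
            # The SSE format drops one leading space after the colon.
            data.append(value[1:] if value.startswith(" ") else value)


# Sample stream matching the server output shown above (hard-coded here).
stream = [
    "event: endpoint\n",
    "data: http://localhost:8080/message?clientID=xxxxx\n",
    "\n",
]
events = list(parse_sse(stream))
endpoint_url = next(data for name, data in events if name == "endpoint")
print(endpoint_url)
```

The client keeps this stream open for the rest of the session, since later responses from the server arrive on it as well.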

Step 3: Send Initialization Request

With the correct endpoint, the client sends its capabilities and information:

curl -X POST -H "Content-Type: application/json" -d \
'{
  "jsonrpc": "2.0",
  "id": 1,
  "method": "initialize",
  "params": {
    "protocolVersion": "2024-11-05",
    "capabilities": {
      "roots": {
        "listChanged": true
      },
      "sampling": {}
    },
    "clientInfo": {
      "name": "ExampleClient",
      "version": "1.0.0"
    }
  }
}' 'http://localhost:8080/message?clientID=xxxxx'
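The same request can be built programmatically. The sketch below mirrors the curl payload above. The helper names `build_initialize_request` and `post_json` are made up for illustration, and `post_json` is defined but not called, since it would need a running server at the endpoint URL.

```python
import json
import urllib.request
from typing import Any, Dict


def build_initialize_request(request_id: int, name: str, version: str) -> Dict[str, Any]:
    """Build the JSON-RPC 'initialize' request shown in Step 3."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": "2024-11-05",
            "capabilities": {"roots": {"listChanged": True}, "sampling": {}},
            "clientInfo": {"name": name, "version": version},
        },
    }


def post_json(url: str, payload: Dict[str, Any]) -> int:
    """POST a JSON body and return the HTTP status code (not called here)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status


payload = build_initialize_request(1, "ExampleClient", "1.0.0")
body = json.dumps(payload, indent=2)
print(body)
```

In a live session you would pass the endpoint URL received in Step 2 to `post_json`; the actual result then arrives on the event stream, not in the POST response body.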

Step 4: Receive Initialization Response

The server acknowledges and returns its own capabilities:

event: message
data: {"jsonrpc":"2.0","id":1,"result":{"protocolVersion":"","capabilities":{"logging":{},"prompts":{"listChanged":true},"resources":{"listChanged":true,"subscribe":true},"tools":{"listChanged":true}},"serverInfo":{"name":"goai-mcp-server","version":"0.1.0"}}}
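A client typically matches this response to its request by `id` and then inspects the advertised capabilities. The following sketch does that for the message above; the function name `read_initialize_result` and its error handling are illustrative assumptions, not part of any particular MCP library.

```python
import json
from typing import Any, Dict

# The "data:" payload from the initialization response above.
raw = (
    '{"jsonrpc":"2.0","id":1,"result":{"protocolVersion":"",'
    '"capabilities":{"logging":{},"prompts":{"listChanged":true},'
    '"resources":{"listChanged":true,"subscribe":true},'
    '"tools":{"listChanged":true}},'
    '"serverInfo":{"name":"goai-mcp-server","version":"0.1.0"}}}'
)


def read_initialize_result(data: str, expected_id: int) -> Dict[str, Any]:
    """Parse a JSON-RPC response and return its 'result' object."""
    message = json.loads(data)
    if message.get("id") != expected_id:
        raise ValueError(f"unexpected response id: {message.get('id')!r}")
    if "error" in message:
        raise RuntimeError(f"server returned an error: {message['error']}")
    return message["result"]


result = read_initialize_result(raw, expected_id=1)
supported = sorted(result["capabilities"])
print(supported)                      # ['logging', 'prompts', 'resources', 'tools']
print(result["serverInfo"]["name"])   # goai-mcp-server
```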

Step 5: Send Initialized Notification

Finally, the client confirms initialization is complete:

curl -X POST -H "Content-Type: application/json" -d \
'{
  "jsonrpc": "2.0",
  "method": "notifications/initialized"
}' 'http://localhost:8080/message?clientID=xxxxx'

At this point, the connection is ready for use, and the client can start handling user requests.
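Because the five steps must happen in order, a client may find it useful to track where it is in the handshake. The sketch below is one hypothetical way to do that; the `Phase` and `Handshake` names are invented for this example and do not come from the protocol.

```python
from enum import Enum, auto


class Phase(Enum):
    CONNECTING = auto()        # Step 1: SSE stream opened
    ENDPOINT_KNOWN = auto()    # Step 2: "endpoint" event received
    INITIALIZE_SENT = auto()   # Step 3: "initialize" request posted
    INITIALIZE_ACKED = auto()  # Step 4: server capabilities received
    READY = auto()             # Step 5: "notifications/initialized" sent


ORDER = list(Phase)  # Enum members iterate in definition order


class Handshake:
    """Track the connection phase and reject out-of-order transitions."""

    def __init__(self) -> None:
        self.phase = Phase.CONNECTING

    def advance(self, to: Phase) -> None:
        next_index = ORDER.index(self.phase) + 1
        if next_index >= len(ORDER) or ORDER[next_index] is not to:
            raise RuntimeError(f"cannot go from {self.phase.name} to {to.name}")
        self.phase = to

    @property
    def ready(self) -> bool:
        return self.phase is Phase.READY


handshake = Handshake()
for phase in ORDER[1:]:
    handshake.advance(phase)
print(handshake.ready)  # True
```

Guarding requests behind `handshake.ready` is a simple way to avoid sending tool calls before initialization has finished.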

MCP in Action: The Complete Request Flow

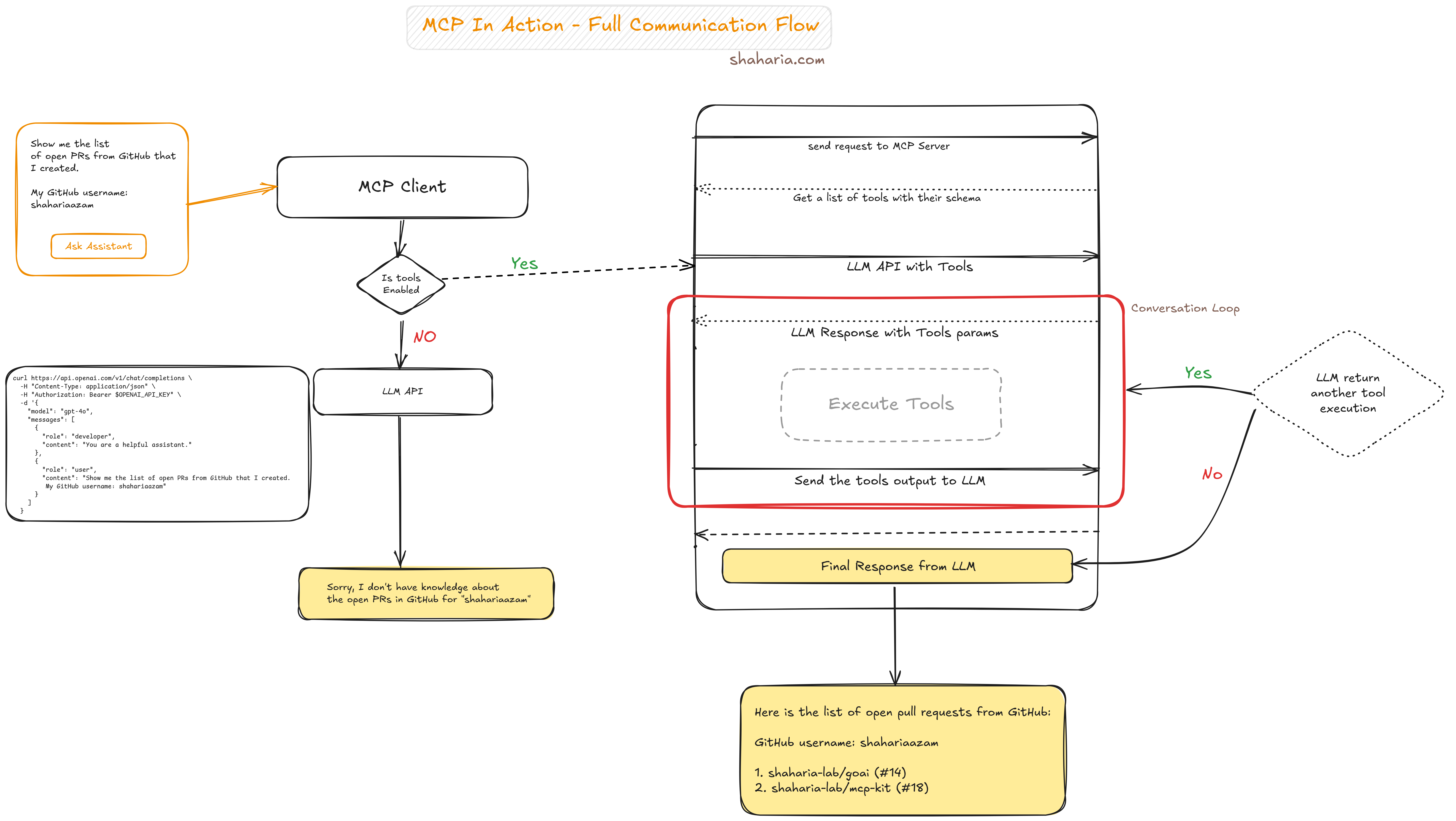

MCP client-server connection flow diagram

Let’s break down exactly how MCP works when a user makes a request:

Step 1: User Initiates a Request

The user interacts with the MCP client (a chatbot or similar interface) with a request like: “Show me the list of open PRs from GitHub that I created. My GitHub username: shahariaazam”

Step 2: Client Requests Available Tools

The MCP client asks the MCP server for the list of available tools using the “tools/list” method.
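As a rough illustration, the tool listing is another JSON-RPC exchange. In the sketch below, the tool name `list_open_prs`, its description, and its schema are hypothetical and stand in for whatever the server actually exposes; the result shape (a `tools` array whose entries carry `name`, `description`, and `inputSchema`) follows the MCP specification.

```python
from typing import Any, Dict, List


def build_tools_list_request(request_id: int) -> Dict[str, Any]:
    """Build the JSON-RPC request that asks the server for its tools."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}


# Hypothetical server response; the tool itself is invented for this example.
sample_response: Dict[str, Any] = {
    "jsonrpc": "2.0",
    "id": 2,
    "result": {
        "tools": [
            {
                "name": "list_open_prs",
                "description": "List open pull requests created by a GitHub user",
                "inputSchema": {
                    "type": "object",
                    "properties": {"username": {"type": "string"}},
                    "required": ["username"],
                },
            }
        ]
    },
}


def tool_names(response: Dict[str, Any]) -> List[str]:
    """Return the names of all tools in a tools/list response."""
    return [tool["name"] for tool in response["result"]["tools"]]


request = build_tools_list_request(2)
print(request["method"], tool_names(sample_response))
```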

Step 3: Tools Information Sent to LLM

The client sends each tool’s name, description, and input schema to the LLM API along with the user’s query.
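How the tool definitions are passed along depends on the LLM provider. The sketch below assumes an API that accepts tools as objects with `name`, `description`, and `input_schema` fields; treat those field names, the `llm_request` layout, and the `list_open_prs` tool as assumptions and check your provider's documentation for the exact shape.

```python
from typing import Any, Dict, List


def to_llm_tool(mcp_tool: Dict[str, Any]) -> Dict[str, Any]:
    """Map one MCP tool definition to an assumed LLM tool format."""
    return {
        "name": mcp_tool["name"],
        "description": mcp_tool.get("description", ""),
        # MCP calls this field "inputSchema"; the target name is provider-specific.
        "input_schema": mcp_tool["inputSchema"],
    }


# Hypothetical tool as it might appear in a tools/list result.
mcp_tools: List[Dict[str, Any]] = [
    {
        "name": "list_open_prs",
        "description": "List open pull requests created by a GitHub user",
        "inputSchema": {
            "type": "object",
            "properties": {"username": {"type": "string"}},
            "required": ["username"],
        },
    }
]

# Hypothetical request body: the user's query plus the converted tools.
llm_request = {
    "messages": [{"role": "user", "content": "Show me my open PRs on GitHub"}],
    "tools": [to_llm_tool(tool) for tool in mcp_tools],
}
print(llm_request["tools"][0]["name"])
```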

Step 4: LLM Decides on Tool Usage

The LLM analyzes the request and determines:

- If any tools are needed to fulfill the request

- Which specific tools are required

- What parameters are needed for tool execution

Step 5: LLM Returns Tool Parameters

The LLM responds with the necessary parameters to execute the required tools.

Step 6: Client Calls the Tools

The MCP client sends these parameters to the MCP server using the “tools/call” method.
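A “tools/call” request names the tool and carries the arguments chosen by the LLM. The sketch below shows the message shape; the tool name `list_open_prs` and its `username` argument are hypothetical, while the `name` and `arguments` parameter keys follow the MCP specification.

```python
import json
from typing import Any, Dict


def build_tools_call_request(
    request_id: int, tool_name: str, arguments: Dict[str, Any]
) -> Dict[str, Any]:
    """Build the JSON-RPC request that asks the server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, as the LLM might have returned them.
call = build_tools_call_request(3, "list_open_prs", {"username": "shahariaazam"})
print(json.dumps(call, indent=2))
```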

Step 7: Server Executes the Tools

The MCP server executes the requested tools (in this case, fetching GitHub PR data) and returns the results to the client.

Step 8: Results Sent Back to LLM

The MCP client forwards the tool execution results back to the LLM.
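Before forwarding, the client usually reduces the tool result to text the LLM can read. In the MCP specification a “tools/call” result carries a `content` list and an optional `isError` flag; the sample response and the helper name `tool_result_text` below are illustrative assumptions.

```python
from typing import Any, Dict


def tool_result_text(response: Dict[str, Any]) -> str:
    """Join the text parts of a tools/call result into one string."""
    result = response["result"]
    texts = [
        part["text"]
        for part in result.get("content", [])
        if part.get("type") == "text"
    ]
    text = "\n".join(texts)
    if result.get("isError"):
        # Surface tool failures to the LLM instead of hiding them.
        return "Tool error: " + text
    return text


# Hypothetical server response for the GitHub example.
sample = {
    "jsonrpc": "2.0",
    "id": 3,
    "result": {
        "content": [
            {"type": "text", "text": "shaharia-lab/goai (#14)"},
            {"type": "text", "text": "shaharia-lab/mcp-kit (#18)"},
        ],
        "isError": False,
    },
}
forwarded = tool_result_text(sample)
print(forwarded)
```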

Step 9: Final Response Generation

The LLM generates a final response based on the tool results. If it needs additional tool executions first, the process loops back to step 5 and repeats until all required information is gathered.
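The loop described in steps 4 through 9 can be sketched with stand-in functions. Everything here is hypothetical: `fake_llm` and `fake_tool` replace a real LLM API and a real MCP server, and the reply format (`tool`, `arguments`, `text`) is invented for this example.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


def run_tool_loop(
    ask_llm: Callable[[List[Message]], Dict[str, Any]],
    call_tool: Callable[[str, Dict[str, Any]], str],
    user_message: str,
    max_rounds: int = 5,
) -> str:
    """Alternate between the LLM and tools until the LLM gives a final answer."""
    messages: List[Message] = [{"role": "user", "content": user_message}]
    for _ in range(max_rounds):
        reply = ask_llm(messages)
        if reply.get("tool") is None:
            return reply["text"]  # no tool requested: this is the final answer
        output = call_tool(reply["tool"], reply["arguments"])
        messages.append({"role": "tool", "name": reply["tool"], "content": output})
    raise RuntimeError("tool loop did not finish within max_rounds")


def fake_llm(messages: List[Message]) -> Dict[str, Any]:
    """Stand-in LLM: request one tool call, then summarize its output."""
    if messages[-1]["role"] == "user":
        return {"tool": "list_open_prs", "arguments": {"username": "shahariaazam"}}
    return {"tool": None, "text": "Open PRs: " + messages[-1]["content"]}


def fake_tool(name: str, arguments: Dict[str, Any]) -> str:
    """Stand-in for a tools/call round trip to the MCP server."""
    return "shaharia-lab/goai (#14), shaharia-lab/mcp-kit (#18)"


answer = run_tool_loop(fake_llm, fake_tool, "Show me my open PRs")
print(answer)
```

The `max_rounds` cap is a design choice rather than a protocol requirement: it keeps a confused model from calling tools indefinitely.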

Step 10: Response Delivered to User

The user receives the complete response:

“Here is the list of open pull requests from GitHub:
GitHub username: shahariaazam
1. shaharia-lab/goai (#14)
2. shaharia-lab/mcp-kit (#18)”

This conversation loop demonstrates how MCP enables a seamless interaction between the user, AI model, and external tools.

The Technical Foundation

MCP builds on established web technologies:

- JSON-RPC 2.0 for structured communication

- Server-Sent Events (SSE) for streaming messages from the server to the client

- HTTP POST endpoints for sending messages from the client to the server

This makes it accessible to developers while providing the robust infrastructure needed for AI-tool communication.
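Since every MCP message is a JSON-RPC 2.0 object, a receiver can tell the message kinds apart by which fields are present. The sketch below applies the JSON-RPC 2.0 rules: a request has `method` and `id`, a notification has `method` but no `id`, and a response has `id` plus `result` or `error`. The function name `classify` is an illustrative assumption.

```python
from typing import Any, Dict


def classify(message: Dict[str, Any]) -> str:
    """Return 'request', 'notification', or 'response' for a JSON-RPC message."""
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in message:
        # Requests expect a reply and carry an id; notifications do not.
        return "request" if "id" in message else "notification"
    if "id" in message and ("result" in message or "error" in message):
        return "response"
    raise ValueError("message is neither a request, notification, nor response")


kinds = [
    classify({"jsonrpc": "2.0", "id": 1, "method": "initialize", "params": {}}),
    classify({"jsonrpc": "2.0", "method": "notifications/initialized"}),
    classify({"jsonrpc": "2.0", "id": 1, "result": {}}),
]
print(kinds)  # ['request', 'notification', 'response']
```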

The Future of AI Tool Integration

MCP represents the beginning of a new era where AI assistants can:

- Access real-time information

- Perform actions on your behalf

- Integrate with any compatible service

- Chain multiple tools together to solve complex problems

As the protocol evolves, we’ll likely see an ecosystem of MCP-compatible tools emerge, similar to how API marketplaces transformed web development.

Key Benefits for Developers and Users

For developers, MCP offers:

- A standardized way to make tools accessible to AI models

- Reduced implementation complexity

- Greater interoperability between AI systems

For users, the benefits include:

- More capable AI assistants

- Fewer hallucinations, because answers can be grounded in data fetched from tools

- AI that can take actions rather than just provide information

Getting Started with MCP

If you’re interested in implementing MCP in your applications, the first step is understanding the connection flow outlined above. From there, you can explore how to define tools, handle requests, and process responses according to the protocol specifications.